Published Date : 2019年10月17日6:04

架空のクラウドソーシング案件に挑戦してみる(3-1)

Taking On a Fictional Crowdsourcing Job (3-1)

This blog has an English translation

架空のお仕事をしてみる企画(3)です。

This is part 3 of a series in which I take on fictitious jobs.

仮に自分がフリーランスで、 ある依頼者から適当な仕事を振られてみたら、 果たしてクリアできるのか?といった企画。

Suppose I were a freelance programmer and a client handed me some arbitrary job: could I actually pull it off? That's the idea.

この企画は架空のものですが、日本のクラウドソーシング市場に氾濫しているよくある案件と値段と工数を参考にしてます。

The job is fictitious, but it is modeled on the typical jobs, prices, and man-hours that flood the Japanese crowdsourcing market.

依頼者からの要望

Request from the client

仕事の依頼内容は、航空券価格クローリングです。

My job assignment is airline ticket price crawling.

Requirements

| 1 | 指定された複数の航空券予約サイトから、指定された路線区間・出発日の一ヶ月間の航空券価格データをクローリングする。 The requirement is to crawl one month of airline ticket price data, for the designated routes and departure dates, from several designated airline ticket booking sites. |
|---|---|
| 2 | 支払い金額は10000円です。 The payment is 10,000 yen (about $100). |
| 3 | 期間は5日以内です。 Please finish within 5 days. |
| 4 | 納品物はソースコードとクローリングのデータです。 Deliverables are the source code and the crawled data. |

対象URL

Target URL

対象URLは全部で6つ。 実在の航空券予約サイトだが、一応プライバシー保護の為隠す。

There are six target URLs in total. They are real airline ticket booking sites, but I have hidden them for privacy.

試しに6つのサイトでそれぞれ検索をかけてみた。 URLに変化があるのは5つ。そのうち一つは変化なし。

I tried running a search on each of the six sites. On five of them the URL changes with the search; on the remaining one it does not.

| 1 | https://spamham.ham |
|---|---|
| 2 | https://spam.spam |
| 3 | https://spam.egg |
| 4 | https://ham.spam |
| 5 | https://bacon.egg |
| 6 | https://wonderful.spam |

ツール制作開始。

Start tool production

パラメーターに数値を渡すタイプはScrapyかrequests&BS4を使用して、URLに変化が無いタイプはSeleniumを使用する。

For the sites that take the search values as URL parameters I'll use Scrapy or requests with BS4, and for the site whose URL does not change I'll use Selenium.
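For the sites whose URL changes with the search, the month of search URLs could be generated up front. This is only a minimal sketch: the `/search` path and the parameter names `dep`, `des`, and `date` are hypothetical placeholders, since the real sites and their parameters are hidden in this post.

```python
from datetime import date, timedelta
from urllib.parse import urlencode


def build_search_urls(base_url, dep, des, start, days=30):
    """Return one search URL per departure date, beginning at `start`.

    The query parameter names are placeholders, not the real sites' names.
    """
    urls = []
    for offset in range(days):
        day = start + timedelta(days=offset)
        query = urlencode({
            "dep": dep,
            "des": des,
            "date": day.strftime("%Y/%m/%d"),  # urlencode escapes the slashes
        })
        urls.append(f"{base_url}?{query}")
    return urls


# Hypothetical usage: Haneda (HND) to Shin-Chitose (CTS) for 30 days.
urls = build_search_urls("https://spam.spam/search", "HND", "CTS", date(2019, 11, 1))
print(len(urls))
print(urls[0])
```

Each URL in the list could then be handed to Scrapy or requests one at a time.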

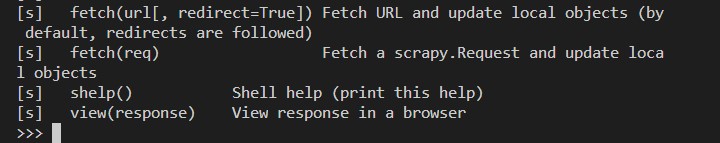

試しにScrapyとScrapy Shellを利用して様子をみる。

I'll give it a try with Scrapy and the Scrapy Shell.

    mkdir case003
    cd case003
    python -m venv py37
    source py37/bin/activate #mac
    py37\Scripts\activate.bat #win
    pip install Scrapy
    scrapy shell URL

Shell(対話型)が立ち上がったら、取り敢えずパラメーターに変数を渡してみる。

Once the interactive shell is up, I'll start by passing values to the URL parameters.

    fetch("URL?param=xx&param=yy")
    header = response.xpath('//th/text()').extract()
    header = [h.strip() for h in header if h.strip() != '']

片道切符で、羽田から新千歳までの料金を検索。Table構造になっていたので、ヘッダーとコンテンツを取得する。

I searched for one-way fares from Haneda to Shin-Chitose. The results are laid out as a table, so I fetch the header and the contents.

    >>> header
    ['便名', '出発/到着', '座席', '割引運賃', '変更可能運賃']

問題発生

A problem has occurred

headerは問題無く取れました。が、しかし、contentsが取れません。

I could get the header without any problem. The contents, however, came back empty.

    >>> contents = response.xpath('//div[@class="active target_table"]/table')
    >>> contents
    []

試しにHTMLをファイルに出力してみます。

To investigate, I'll write the response HTML out to a file.

    >>> with open('response.html','w') as f:
    ...     f.write(response.text)

The relevant part of the saved file:

    <!-- ここから往路のコード ---------------------------------------------------->
    <div id="ouro" class="active target_table"></div>
    <!-- ここから復路のコード ---------------------------------------------------->
    <div id="fukuro" class=" target_table"></div>

実際の表示されているソースコードはこうなっている。

Here is the source as it is actually rendered in the browser.

    <!-- ここから往路のコード ---------------------------------------------------->
    <div id="ouro" class="active target_table">
    ...................................................................
    <table class="flight_table sorting_row category_display_row " data-airline="ANA">
    <tbody><tr>
      <td class="flightname">
        <p>ANA 065</p>
        <img src="https://cdn.hogehogehogehoge">
        <p class="mile-icon"><img src="https://cdn.hogehogehogehoge"><span>マイルもたまる</span></p>
      </td>
      <td class="dep_dest sorting_column">
        <div class="departure_cell">
          羽田<br>
          <span class="target_data dep_des_time">13:00</span><br>
        </div>
        <div class="destination_cell">
          新千歳<br>
          <span class="dep_des_time">14:35</span><br>
        </div>
      </td>
      <td class="seat_type">
        普通席
      </td>
    .............................................................................
    </tbody></table></div>
    <!-- ここから復路のコード ---------------------------------------------------->
    <div id="fukuro" class=" target_table"></div>

どうやら動的に検索結果を表示しているようです。

It appears that the search results are rendered dynamically.
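The same check can be automated against the saved `response.html`: if the result container exists in the static HTML but has no child tags, the table is most likely filled in by JavaScript. A minimal sketch using only the standard library; the class and function names are hypothetical, and the test is a heuristic rather than a definitive one.

```python
from html.parser import HTMLParser


class ContainerProbe(HTMLParser):
    """Count the tags nested inside the element with a given id."""

    # Tags that never have a closing tag, so they must not change the depth.
    VOID = {"br", "img", "input", "hr", "meta", "link"}

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.found = False    # True once the target element has been seen
        self.depth = 0        # > 0 while the parser is inside the target
        self.child_tags = 0   # number of tags seen inside the target

    def handle_starttag(self, tag, attrs):
        if self.depth:
            self.child_tags += 1
            if tag not in self.VOID:
                self.depth += 1
        elif dict(attrs).get("id") == self.target_id:
            self.found = True
            self.depth = 1

    def handle_endtag(self, tag):
        if self.depth and tag not in self.VOID:
            self.depth -= 1


def looks_js_rendered(html, target_id):
    """True when the container exists in the HTML but holds no child tags."""
    probe = ContainerProbe(target_id)
    probe.feed(html)
    return probe.found and probe.child_tags == 0


static_html = '<div id="ouro" class="active target_table"></div>'
browser_html = ('<div id="ouro" class="active target_table">'
                '<table><tbody><tr><td>ANA 065</td></tr></tbody></table></div>')
print(looks_js_rendered(static_html, "ouro"))
print(looks_js_rendered(browser_html, "ouro"))
```

When the check comes back true for a site, that site is a candidate for a real browser (Selenium) rather than Scrapy or requests.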

我、Selenium中毒者なり。

I am a Selenium addict.

APIに組み込み辛い。並列して他の処理をし辛い。 コードが非常に泥臭くなる。 てか、元々スクレイピング用では無い。 だが、我々はSeleniumを求めている!

Selenium is hard to integrate into an API. It is hard to run other processing in parallel with it. The code gets very messy. It was never meant for scraping in the first place. And yet we crave Selenium!

JSは麻薬

途方に暮れた

Scrapingを静かに溶かす

舞い上がる

WEBを踊らせて

震えるBrowserを

記憶のPyにつつむ

I keep my Code for Crawling to the web site

Endless Selenium

Fall on my Script

心の傷に

Let me forget.

all of the AJAX,

all of the Requests.

おふざけはここまでだよ!40秒でクロールしな!

That's enough joking around! Crawl in 40 seconds!

その前に仮想環境のPythonを消去します。すまない!Py37!

Before that, I'll delete the Python virtual environment. Sorry, Py37!

    >>> exit()
    deactivate #mac
    py37\Scripts\deactivate.bat #win
    rm -r py37
    rm response.html
    python -m venv py37 # revived!
    source py37/bin/activate #mac (activate the new environment again)
    py37\Scripts\activate.bat #win
    pip install selenium
    mkdir site001
    mkdir site001/data #mac
    mkdir site001\data #win
    cd site001
    echo # wonderful spam! > collect.py

眠りに落ちる前に一つのサイトだけ片付けてしまおう!

Let's finish off just one site before I fall asleep!

collect.py

    # wonderful spam!
    from selenium.webdriver.support.ui import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys
    import csv
    import time
    from datetime import datetime
    import os,sys
    sys.path.append('./site001/scripts')
    import collect_utils

    # if you want to use chromedriver_binary
    # import chromedriver_binary
    # chromedriver_binary.add_chromedriver_to_path()

    class Collect():
        def __init__(self, url, way):
            self.base_url = url
            self.way = way

        def close_modal(self,driver):
            # Dismiss the modal dialog if one is shown on page load.
            try:
                driver.find_element_by_xpath('//div[@class="modal-dialog"]/div[@class="modal-content"]/div[@class="modal-header"]').click()
            except:
                print('element not interactable')

        def switch_ways(self, driver, way_num):
            """
            way_num:0 = roundtrip
            way_num:1 = one-way
            way_num:2 = excursion
            """
            try:
                WebDriverWait(driver,30).until(EC.element_to_be_clickable((By.XPATH,'//ul[@class="ways_soratabi clearfix"]/li[1]')))
            except:
                print('element click intercepted')
                driver.quit()
            ways = driver.find_elements_by_xpath('//ul[@class="ways_soratabi clearfix"]/li')
            way = ways[way_num]
            driver.execute_script("arguments[0].click()", way)

        def page_feed(self,driver, end_date):
            # Return True on the end date; otherwise click the next day and return False.
            current_date = driver.find_element_by_xpath('//button[@class="btn_weekly today"]')
            cyear = current_date.get_attribute('year')
            cmonth = current_date.get_attribute('month')
            cday = current_date.get_attribute('day')
            if f'{cyear}/{cmonth}/{cday}' == end_date:
                return True
            else:
                parent_li = current_date.find_element_by_xpath('..')
                sibl_li = parent_li.find_element_by_xpath('following-sibling::li')
                sibl_li.find_element_by_xpath('button[@class="btn_weekly"]').click()
                return False

        def fetch_table_header(self, driver):
            header_element = driver.find_elements_by_xpath('//div[@id="flight_table_holder"]/table/tbody/tr/th')
            table_header = [e.text for e in header_element if e.text != '']
            return table_header

        def fetch_table_contents(self, driver, table_header):
            try:
                WebDriverWait(driver,6).until(EC.visibility_of_element_located((By.XPATH,'//div[@id="ouro"]/table')))
            except:
                print('empty contents')
            # See if it's empty
            content_elements = driver.find_elements_by_xpath('//div[@id="ouro"]/table')
            if content_elements != []:
                # One dict per flight table, keyed by the header names.
                table_contents = [{table_header[idx]:td.text.replace('\n','->') for idx,td in enumerate(c.find_elements_by_tag_name('td'))} for c in content_elements]
                return table_contents
            else:
                return None

        def collect_price(self, driver, current_url, way_num):
            # Load a file with specified conditions.
            rows = collect_utils.read_file()
            for row in rows:
                try:
                    WebDriverWait(driver,30).until(EC.visibility_of_element_located((By.XPATH,'//select[@id="ID_F1Departure"]')))
                except:
                    print('element invisible')
                    driver.quit()
                # Entering the data of the imported file.
                dep_box = driver.find_element_by_xpath('//select[@id="ID_F1Departure"]')
                replace_btn = driver.find_element_by_xpath('//li[@class="flight_replace replace_btn"]')
                des_box = driver.find_element_by_xpath('//select[@id="ID_F1Destination"]')
                dep = row['dep']
                des = row['des']
                # Departure locations
                driver.execute_script("arguments[0].click()", dep_box)
                driver.find_element_by_xpath(f'//li[@data-airport_name="{dep}"]').click()
                # Destination locations
                driver.execute_script("arguments[0].click()", des_box)
                time.sleep(1)
                driver.find_element_by_xpath(f'//span[@id="des_list"]/ul/li[@data-airport_name="{des}"]').click()
                time.sleep(1)
                if way_num == 0:
                    # dep-date
                    dep_date = driver.find_element_by_xpath(f'//li[@class="flight_date to-date"]/span[@class="from-trigger"]/span[@class="text-dep-date"]')
                    # des-date
                    des_date = driver.find_element_by_xpath(f'//li[@class="flight_date returnDay"]/span[@class="to-trigger"]/span[@class="text-des-date"]')
                else:
                    # dep-date
                    dep_date = driver.find_element_by_xpath(f'//li[@class="flight_date to-date"]/span[@class="from-trigger"]/span[@class="text-dep-date"]')
                # set date
                start = row['start']
                end = row['end']
                start_date_check = collect_utils.check_date(start)
                end_date_check = collect_utils.check_date(end)
                if start_date_check:
                    start = start.replace('/','/')
                else:
                    start = collect_utils.get_date()[0]
                if end_date_check:
                    end = end.replace('/','/')
                else:
                    end = collect_utils.get_date()[1]
                if way_num == 0:
                    # enter departure date
                    driver.execute_script(f"arguments[0].innerHTML = '{start}'", dep_date)
                    # enter destination date
                    driver.execute_script(f"arguments[0].innerHTML = '{end}'", des_date)
                else:
                    # enter departure date
                    driver.execute_script(f"arguments[0].innerHTML = '{start}'", dep_date)
                # click search button
                driver.find_element_by_xpath('//input[@class="search_button"]').click()
                # fetch table header
                table_header = self.fetch_table_header(driver)
                # One output directory per trip type, route, and run date.
                date_obj = datetime.now()
                dir_date = datetime.strftime(date_obj,'%Y%m%d')
                if way_num == 0:
                    dir_name = f'往復-{dep}-{des}-{dir_date}'
                else:
                    dir_name = f'片道-{dep}-{des}-{dir_date}'
                if os.path.exists(f'site001/data/{dir_name}'):
                    pass
                else:
                    os.mkdir(f'site001/data/{dir_name}')
                # Walk forward one day at a time until the end date is reached.
                while True:
                    current_date_text = driver.find_element_by_xpath('//div[@id="showSearchBoxButton"]').text
                    current_date = current_date_text.replace('\u3000|\u3000','-').replace(' - ','-').replace(' (','(').replace('/','-')
                    current_date = f"{current_date.split('-')[2]}-{current_date.split('-')[3]}"
                    if way_num == 0:
                        endsplit = end.split('/')
                        file_name = f"{dir_name}/{current_date}_{endsplit[1]}-{endsplit[2]}"
                    else:
                        file_name = f'{dir_name}/{current_date}'
                    table_contents = self.fetch_table_contents(driver, table_header)
                    if table_contents is not None:
                        if self.page_feed(driver, end):
                            if way_num == 0:
                                endsplit = end.split('/')
                                last_day = int(endsplit[-1]) + 1
                                end = f'{endsplit[1]}-{last_day}'
                                file_name = f"{dir_name}/往復-{current_date}_{end}"
                            collect_utils.write_file(file_name, table_header, table_contents)
                            break
                        else:
                            collect_utils.write_file(file_name, table_header, table_contents)
                    else:
                        if self.page_feed(driver, end):
                            break
                        else:
                            pass
                # Go back to the search page for the next row of conditions.
                driver.get(current_url)
            driver.quit()

        def main(self):
            driver = collect_utils.set_web_driver(self.base_url)
            self.close_modal(driver)
            way_num = int(self.way)
            self.switch_ways(driver, way_num)
            current_url = driver.current_url
            self.collect_price(driver, current_url, way_num)

取り敢えず収集役のClassファイルのみ制作。

For now, I have written only the collector class file.
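`collect_utils` is not shown in this post, so here is a rough idea of what happens to each row. `fetch_table_contents` pairs every cell with its column name and flattens line breaks into `->`, and the resulting dicts are passed to `collect_utils.write_file`. The sketch below is an assumption, not the real helper: `cells_to_row` and `write_rows` are hypothetical names, and it writes CSV to an in-memory stream instead of a file.

```python
import csv
import io


def cells_to_row(table_header, cell_texts):
    """Pair each cell with its column name, flattening line breaks to '->'."""
    return {name: text.replace("\n", "->")
            for name, text in zip(table_header, cell_texts)}


def write_rows(stream, table_header, rows):
    """Write dict rows as CSV with a header line (stand-in for write_file)."""
    writer = csv.DictWriter(stream, fieldnames=table_header)
    writer.writeheader()
    writer.writerows(rows)


# Sample values taken from the rendered table shown earlier in this post.
header = ["便名", "出発/到着", "座席"]
row = cells_to_row(header, ["ANA 065", "羽田\n13:00\n新千歳\n14:35", "普通席"])

buffer = io.StringIO()
write_rows(buffer, header, [row])
print(buffer.getvalue())
```

One difference from collect.py: `zip` silently drops extra cells when a row has more cells than headers, whereas indexing `table_header[idx]` raises an `IndexError` in that case.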

汎用性を作るための合体作業とコードの説明は次回です。

Next time, I'll cover combining the pieces to make the tool more general-purpose, and explain the code.

See You Next Page!